MobileNetV2

Last Updated: 12/09/2025

Overview

The MobileNetV2 example demonstrates how to classify objects in images or video streams using the SpacemiT AI processor. The inference node outputs the predicted object class as a ROS 2 message.

- Supported classes: MobileNetV2 can classify 1,000 object types, including animals, fruits, and vehicles. Refer to the official ImageNet (ILSVRC 2012) labels for a complete list of supported categories.

- Typical applications: MobileNetV2 predicts the category of a given image and is commonly used in object recognition, digit recognition, image retrieval, and text recognition.
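How a classifier turns raw scores into a single "predicted category" can be illustrated with a short, generic sketch. It assumes the model emits one raw score (logit) per class, as ImageNet classifiers such as MobileNetV2 typically do, and applies softmax followed by top-k selection. This is not the br_perception post-processing code, and the four-entry label list is a hypothetical stand-in for the 1,000 ImageNet labels.

```python
import heapq
import math
from typing import List, Sequence, Tuple


def softmax(logits: Sequence[float]) -> List[float]:
    """Convert raw scores to probabilities (subtracting the max for numerical stability)."""
    peak = max(logits)
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def top_k(logits: Sequence[float], labels: Sequence[str], k: int = 5) -> List[Tuple[str, float]]:
    """Return the k most probable (label, probability) pairs, best first."""
    if len(logits) != len(labels):
        raise ValueError("expected exactly one label per score")
    probs = softmax(logits)
    best = heapq.nlargest(k, range(len(probs)), key=probs.__getitem__)
    return [(labels[i], probs[i]) for i in best]


if __name__ == "__main__":
    # Hypothetical 4-class head; a real ImageNet classifier has 1,000 outputs.
    labels = ["n02123045 tabby", "n02124075 Egyptian cat", "n02085620 Chihuahua", "n07753592 banana"]
    scores = [2.0, 3.5, 0.1, -1.0]
    for label, p in top_k(scores, labels, k=2):
        print(f"{label}: {p:.3f}")
```

Looking at the top-5 entries instead of only the top-1 is a common way to judge how confident a prediction is; the sample outputs in this guide show a single label per result.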

Platform Requirements

SpacemiT RISC-V:

- Pre-flashed with the Bianbu ROS system image

Environment Setup

Install dependencies

sudo apt install python3-opencv ros-humble-cv-bridge ros-humble-camera-info-manager \
ros-humble-image-transport python3-spacemit-ort python3-yaml libyaml-dev python3-numpy

Source the ROS 2 Environment

source /opt/bros/humble/setup.bash

Model Configuration Check

Run the following command to list the model configurations currently supported on your system:

ros2 launch br_perception infer_info.launch.py | grep 'classification'

Sample output:

- config/classification/resnet18.yaml

- config/classification/resnet50.yaml

- config/classification/mobilenet_v2.yaml

For example, to use the ResNet18 model, set:

config_path:=config/classification/resnet18.yaml

Running Inference on an Image

Copy a test image to your current folder:

cp /opt/bros/humble/share/jobot_infer_py/data/classification/kitten.jpg .

Run inference and print the results only:

ros2 launch br_perception infer_img.launch.py \
config_path:='config/classification/mobilenet_v2.yaml' \
img_path:='./kitten.jpg'

Sample terminal output:

[INFO] [launch]: Default logging verbosity is set to INFO

[INFO] [infer_img_node-1]: process started with pid [527993]

[infer_img_node-1] class predict: n02124075 Egyptian cat

[INFO] [infer_img_node-1]: process has finished cleanly [pid 527993]

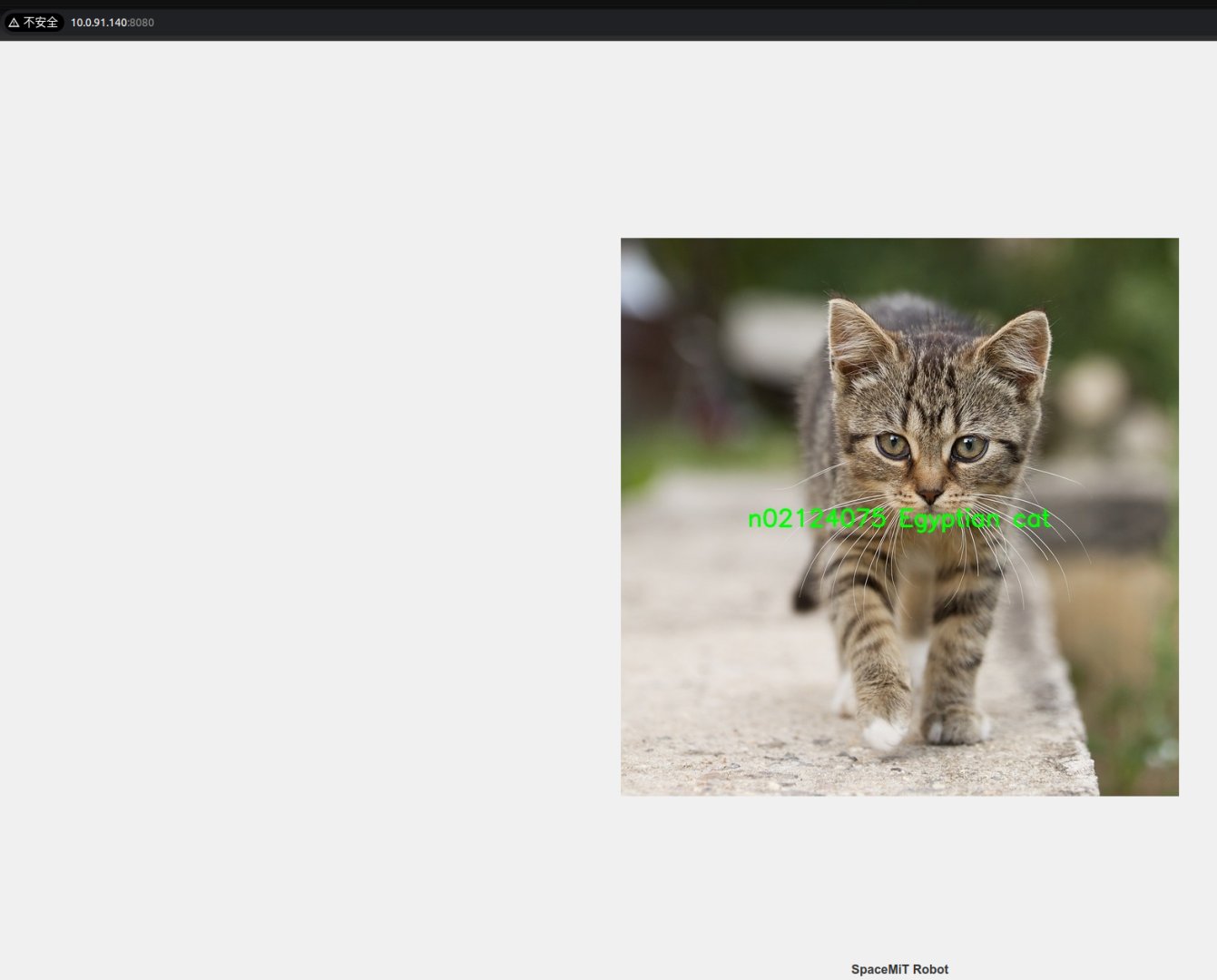

Viewing Inference Results in a Web Browser

Terminal 1 – Run the inference node:

ros2 launch br_perception infer_img.launch.py \
config_path:='config/classification/mobilenet_v2.yaml' \
img_path:='./kitten.jpg' \
publish_result_img:=true \
result_img_topic:='result_img' \
result_topic:='/inference_result'

Sample output:

[INFO] [launch]: All log files can be found below /home/zq-pi3/.ros/log/2025-04-28-14-23-22-474545-spacemit-k1-x-MUSE-Pi-board-528137

[INFO] [launch]: Default logging verbosity is set to INFO

[INFO] [infer_img_node-1]: process started with pid [528138]

[infer_img_node-1] class predict: n02124075 Egyptian cat

[infer_img_node-1] The image inference results are published cyclically

[infer_img_node-1] The image inference results are published cyclically

The prefix n02124075 is the ImageNet class ID (a WordNet synset ID), and "Egyptian cat" is the corresponding class label.

Terminal 2 – Launch the WebSocket viewer:

ros2 launch br_visualization websocket_cpp.launch.py image_topic:='/result_img'

Sample output (WebSocket viewer):

[INFO] [launch]: Default logging verbosity is set to INFO

[INFO] [websocket_cpp_node-1]: process started with pid [276081]

[websocket_cpp_node-1] Please visit in your browser: 10.0.91.140:8080

[websocket_cpp_node-1] [INFO] [1745548855.705411901] [websocket_cpp_node]: WebSocket Stream Node has started.

[websocket_cpp_node-1] [INFO] [1745548855.706897013] [websocket_cpp_node]: Server running on http://0.0.0.0:8080

[websocket_cpp_node-1] [INFO] [1745548856.281858684] [websocket_cpp_node]: WebSocket client connected.

Note: Visit the IP shown after "Please visit in your browser:" (here 10.0.91.140:8080) to view inference results. The IP may differ depending on your network.
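If the browser cannot open the viewer, a quick TCP reachability check from the client machine helps tell a network problem apart from a page problem. The sketch below only tests whether something accepts connections on the address and port printed by websocket_cpp_node (8080 in the sample output); `viewer_reachable` is a hypothetical helper, not part of br_visualization.

```python
import socket


def viewer_reachable(host: str, port: int = 8080, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within `timeout` seconds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and unreachable hosts.
        return False


if __name__ == "__main__":
    # Replace with the address printed after "Please visit in your browser:".
    print(viewer_reachable("10.0.91.140", 8080))
```

A `False` result usually points at the IP, the port, or a firewall between the two machines rather than at the inference node.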

Viewing Raw Inference Results

As mentioned above, the prefix n02124075 is the ImageNet class ID.

The parameter result_topic:='/inference_result' specifies the ROS 2 topic where the inference results are published. You can view these results by running:

ros2 topic echo /inference_result

Example output:

data: n02124075 Egyptian cat

---
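Judging from the sample outputs in this guide, the `data` field of each result message follows the pattern `<ImageNet class ID> <label>`. Assuming that format holds, a downstream node could split it as shown below. `parse_result` and `Prediction` are illustrative names, not part of br_perception.

```python
import re
from typing import NamedTuple

# WordNet noun synset IDs used by ImageNet: "n" followed by 8 digits, then the label.
_RESULT_RE = re.compile(r"^\s*(n\d{8})\s+(.+?)\s*$")


class Prediction(NamedTuple):
    wnid: str   # ImageNet class ID, e.g. "n02124075"
    label: str  # human-readable class name, e.g. "Egyptian cat"


def parse_result(data: str) -> Prediction:
    """Split the `data` string of an /inference_result message into class ID and label."""
    match = _RESULT_RE.match(data)
    if match is None:
        raise ValueError(f"unexpected result format: {data!r}")
    return Prediction(match.group(1), match.group(2))


if __name__ == "__main__":
    print(parse_result("n02124075 Egyptian cat"))
```

Keeping the class ID separate from the label makes it easier to compare results across models, since labels can contain spaces and commas while the ID is a fixed-width token.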

- The message type is std_msgs/msg/String.

- Press Ctrl + C in Terminal 1 to stop the running inference node, then relaunch it:

ros2 launch br_perception infer_img.launch.py \
config_path:='config/classification/mobilenet_v2.yaml' \
img_path:='./kitten.jpg' \
publish_result_img:=true \
result_img_topic:='result_img' \
result_topic:='/inference_result'

To test with another image, simply update the img_path parameter. The web interface will automatically refresh with the new inference result.
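Batch-testing several images can be scripted around the print-only command used earlier. Without publish_result_img:=true, the node exits after printing one `class predict:` line (as in the first sample output), so each run can be awaited in turn. This sketch assumes `ros2` is on PATH (the ROS 2 environment has been sourced) and that the log line keeps the format shown in the sample output; `build_command`, `extract_prediction`, and `classify_folder` are hypothetical helpers.

```python
import re
import subprocess
import sys
from pathlib import Path
from typing import List, Optional

CONFIG = "config/classification/mobilenet_v2.yaml"
# Matches e.g. "[infer_img_node-1] class predict: n02124075 Egyptian cat"
_PREDICT_RE = re.compile(r"class predict:\s*(.+?)\s*$", re.MULTILINE)


def build_command(img_path: str, config_path: str = CONFIG) -> List[str]:
    """Print-only launch command (no publish_result_img), so the node exits after one image."""
    return [
        "ros2", "launch", "br_perception", "infer_img.launch.py",
        f"config_path:={config_path}",
        f"img_path:={img_path}",
    ]


def extract_prediction(launch_output: str) -> Optional[str]:
    """Return the text after "class predict:", or None if that line is missing."""
    match = _PREDICT_RE.search(launch_output)
    return match.group(1) if match else None


def classify_folder(folder: str) -> None:
    for image in sorted(Path(folder).glob("*.jpg")):
        try:
            proc = subprocess.run(
                build_command(str(image)),
                stdout=subprocess.PIPE,
                stderr=subprocess.STDOUT,  # capture both streams in one string
                text=True,
                timeout=120,
            )
        except subprocess.TimeoutExpired:
            print(f"{image.name}: timed out")
            continue
        print(f"{image.name}: {extract_prediction(proc.stdout)}")


if __name__ == "__main__":
    classify_folder(sys.argv[1] if len(sys.argv) > 1 else ".")
```

Each image starts a fresh launch process, which keeps the script simple but likely repeats the model-loading cost for every image.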

Video Stream Inference

# Load the ROS 2 environment

source /opt/bros/humble/setup.bash

Start the USB camera (set video_device to your camera's device node):

ros2 launch br_sensors usb_cam.launch.py video_device:="/dev/video20"
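The device node for a USB camera varies between boards and kernels, and a UVC camera may register more than one /dev/video* node, so /dev/video20 might not match your setup. A small sketch for listing candidates in numeric order follows; `v4l2-ctl --list-devices` from the v4l-utils package, if installed, shows which node belongs to which camera. `sort_video_devices` is a hypothetical helper.

```python
import glob
import re
from typing import Iterable, List


def sort_video_devices(paths: Iterable[str]) -> List[str]:
    """Sort /dev/videoN paths by N (plain string sorting would put video20 before video3)."""
    def index(path: str) -> int:
        match = re.search(r"(\d+)$", path)
        return int(match.group(1)) if match else -1

    return sorted(paths, key=index)


if __name__ == "__main__":
    for dev in sort_video_devices(glob.glob("/dev/video*")):
        print(dev)
```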

Option 1: Inference with Web Visualization

Terminal 1 — Run inference:

ros2 launch br_perception infer_video.launch.py \
config_path:='config/classification/mobilenet_v2.yaml' \
sub_image_topic:='/image_raw' \
publish_result_img:='true' \
result_topic:='/inference_result'

Terminal 2 — Start WebSocket visualization:

ros2 launch br_visualization websocket_cpp.launch.py image_topic:='/result_img'

Sample output (WebSocket viewer):

[INFO] [launch]: Default logging verbosity is set to INFO

[INFO] [websocket_cpp_node-1]: process started with pid [276081]

[websocket_cpp_node-1] Please visit in your browser: 10.0.91.140:8080

[websocket_cpp_node-1] [INFO] [1745548855.705411901] [websocket_cpp_node]: WebSocket Stream Node has started.

[websocket_cpp_node-1] [INFO] [1745548855.706897013] [websocket_cpp_node]: Server running on http://0.0.0.0:8080

[websocket_cpp_node-1] [INFO] [1745548856.281858684] [websocket_cpp_node]: WebSocket client connected.

Note: Visit the IP shown after "Please visit in your browser:" (here 10.0.91.140:8080) to view inference results. The IP may differ depending on your network.

Option 2: Inference without Visualization (Data Only)

If you only want the raw inference results, run:

ros2 launch br_perception infer_video.launch.py \
config_path:='config/classification/mobilenet_v2.yaml' \
sub_image_topic:='/image_raw' \
publish_result_img:='false' \
result_topic:='/inference_result'

Print the /inference_result topic:

ros2 topic echo /inference_result

Sample output:

data: n03788365 mosquito net

---

The message format is the standard ROS 2 string: std_msgs/msg/String.
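Per-frame predictions on a live stream can flicker between similar classes. As an illustration, the following rclpy sketch subscribes to /inference_result (std_msgs/msg/String, as noted above) and smooths the labels with a majority vote over the last N messages. The vote is a technique added here for illustration, not something the br_perception node does; the window size of 15, the node name `result_listener`, and the QoS depth of 10 (assumed compatible with the publisher) are all assumptions.

```python
from collections import Counter, deque
from typing import Deque


class LabelSmoother:
    """Majority vote over the most recent labels to damp frame-to-frame flicker."""

    def __init__(self, window: int = 15) -> None:
        # Only the last `window` labels are kept; older ones drop out automatically.
        self._recent: Deque[str] = deque(maxlen=window)

    def update(self, label: str) -> str:
        """Record a new label and return the most frequent one in the window.

        Ties go to the label that appears earliest in the current window.
        """
        self._recent.append(label)
        return Counter(self._recent).most_common(1)[0][0]


def main() -> None:
    # ROS 2 imports live here so LabelSmoother can be reused without a ROS 2 environment.
    import rclpy
    from rclpy.node import Node
    from std_msgs.msg import String

    class ResultListener(Node):
        def __init__(self) -> None:
            super().__init__("result_listener")
            self._smoother = LabelSmoother(window=15)
            self._sub = self.create_subscription(String, "/inference_result", self._on_result, 10)

        def _on_result(self, msg: String) -> None:
            stable = self._smoother.update(msg.data)
            self.get_logger().info(f"latest: {msg.data} | smoothed: {stable}")

    rclpy.init()
    node = ResultListener()
    try:
        rclpy.spin(node)
    except KeyboardInterrupt:
        pass  # Ctrl + C stops the listener
    finally:
        node.destroy_node()
        rclpy.shutdown()


if __name__ == "__main__":
    main()
```

A larger window gives steadier labels but reacts more slowly when the object in front of the camera actually changes.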

infer_video.launch.py Parameters

| Parameter Name | Description | Default Value |
|---|---|---|
| config_path | Path to the model configuration file used for inference | config/detection/yolov6.yaml |
| sub_image_topic | Image topic to subscribe to | /image_raw |
| publish_result_img | Whether to publish the rendered inference image | false |
| result_img_topic | Topic for the rendered result image (used only when publish_result_img=true) | /result_img |
| result_topic | Topic on which inference results are published | /inference_result |
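Note that config_path defaults to a detection model (YOLOv6), so classification runs need to pass it explicitly. The sketch below merges overrides into the defaults from this table and renders `name:=value` launch arguments, rejecting unknown names before anything is launched. `infer_video_args` is a hypothetical helper, and accepting only the strings 'true' and 'false' for publish_result_img is a conservative assumption about the launch file.

```python
from typing import Dict, List

# Defaults copied from the infer_video.launch.py parameter table.
INFER_VIDEO_DEFAULTS: Dict[str, str] = {
    "config_path": "config/detection/yolov6.yaml",
    "sub_image_topic": "/image_raw",
    "publish_result_img": "false",
    "result_img_topic": "/result_img",
    "result_topic": "/inference_result",
}


def infer_video_args(**overrides: str) -> List[str]:
    """Merge overrides into the documented defaults and render name:=value arguments."""
    unknown = set(overrides) - set(INFER_VIDEO_DEFAULTS)
    if unknown:
        raise ValueError(f"unknown launch argument(s): {sorted(unknown)}")
    merged = {**INFER_VIDEO_DEFAULTS, **overrides}
    if merged["publish_result_img"] not in ("true", "false"):
        raise ValueError("publish_result_img must be 'true' or 'false'")
    return [f"{name}:={value}" for name, value in merged.items()]


if __name__ == "__main__":
    cmd = ["ros2", "launch", "br_perception", "infer_video.launch.py"]
    cmd += infer_video_args(config_path="config/classification/mobilenet_v2.yaml")
    print(" ".join(cmd))
```

Passing every argument explicitly, even when it matches the default, makes the command self-documenting when copied into scripts or logs.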