UNet

Last updated: 12/09/2025

UNet Overview

This guide shows how to run image segmentation with the lightweight UNet semantic segmentation model on the SpacemiT AI processor.

The model accepts static images or video streams as input and outputs pixel-level semantic labels (grayscale) and pseudo-color renderings, which are published via ROS 2 for downstream perception or control modules.

The model is trained on the Cityscapes dataset and deployed in ONNX format. It segments typical urban scene elements such as people, vehicles, roads, and traffic signs.
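The label output contains class indices, not names. Once the class order is known, indices can be mapped to names as in the sketch below. It assumes the model uses the common 19-class Cityscapes training order (trainIds 0 to 18). That order is an assumption and is not stated in `unet.yaml`, so check the model configuration before relying on it. `class_name` and `present_classes` are hypothetical helpers.

```python
# ASSUMPTION: the model uses the standard 19-class Cityscapes "trainId" order.
# Verify against the class list of config/segmentation/unet.yaml before relying on it.
CITYSCAPES_CLASSES = (
    "road", "sidewalk", "building", "wall", "fence", "pole",
    "traffic light", "traffic sign", "vegetation", "terrain", "sky",
    "person", "rider", "car", "truck", "bus", "train",
    "motorcycle", "bicycle",
)


def class_name(label: int) -> str:
    """Return the class name for a label value, or 'unknown' if it is out of range."""
    if 0 <= label < len(CITYSCAPES_CLASSES):
        return CITYSCAPES_CLASSES[label]
    return "unknown"  # e.g. 255, commonly used as the Cityscapes 'ignore' value


def present_classes(labels) -> list:
    """List the names of the classes that occur in an iterable of label values, ordered by index."""
    return [class_name(v) for v in sorted(set(int(x) for x in labels))]
```

Under this assumed ordering, `present_classes([2, 2, 0, 13, 2])` returns `['road', 'building', 'car']`.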

Typical applications for UNet include:

- Autonomous driving perception (road and object segmentation)

- Urban street scene analysis and digital map construction

- Remote sensing land-use and object classification

- Medical image segmentation (e.g., organs or lesions)

- Industrial defect detection and preprocessing

Environment Setup

Install Dependencies

```bash
sudo apt install python3-opencv ros-humble-cv-bridge ros-humble-camera-info-manager \
    ros-humble-image-transport python3-spacemit-ort
```

Load ROS 2 Environment

```bash
source /opt/bros/humble/setup.bash
```

Check Supported Segmentation Models

Run the following command to view available segmentation model configurations:

```bash
ros2 launch br_perception infer_info.launch.py | grep 'segmentation'
```

Example output:

```text
- config/segmentation/unet.yaml
```

Image Inference

Prepare Input Image

```bash
cp /opt/bros/humble/share/jobot_infer_py/data/segmentation/test_unet.jpg .
```

Run Inference and Save Results

```bash
ros2 launch br_perception infer_img.launch.py \
    config_path:='config/segmentation/unet.yaml' \
    img_path:='./test_unet.jpg'
```

Example terminal output:

```text
[INFO] [launch]: All log files can be found below /home/zq-pi/.ros/log/2025-05-26-09-45-18-005008-spacemit-k1-x-MUSE-Pi-board-5995
[INFO] [launch]: Default logging verbosity is set to INFO
[INFO] [infer_img_node-1]: process started with pid [5996]
[infer_img_node-1] Inference time: 4665.87 ms
[infer_img_node-1] The semantic segmentation results are saved in: seg_result.jpg
[infer_img_node-1] The semantic segmentation pseudo-color image is saved to seg_pseudo_color.png
[INFO] [infer_img_node-1]: process has finished cleanly [pid 5996]
```

Output files:

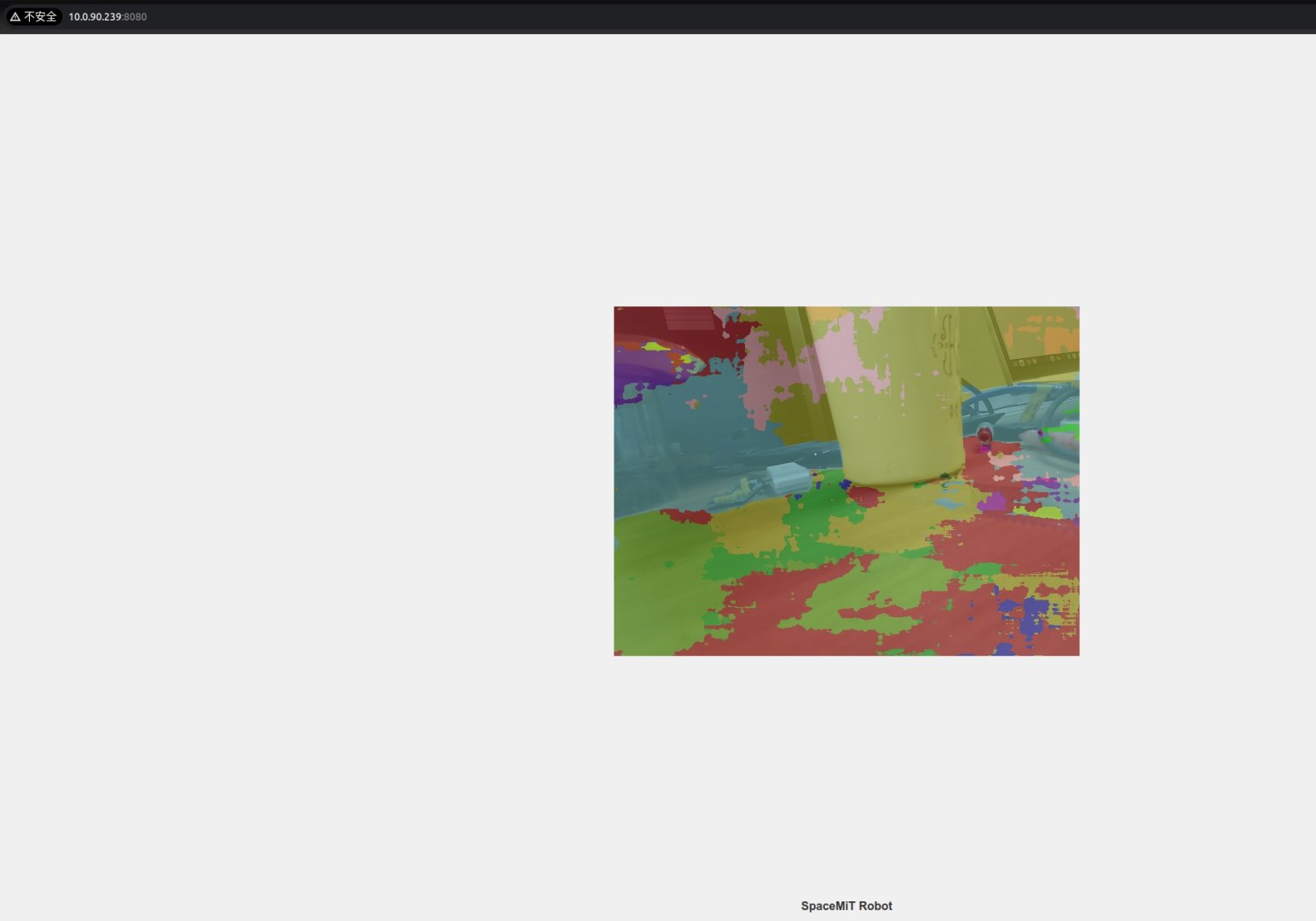

- seg_result.jpg: overlay of the input image with the predicted segmentation
- seg_pseudo_color.png: pseudo-color map in which each semantic class is drawn in its own color
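Both kinds of output can be approximated from a label map with NumPy. The pseudo-color map looks up one RGB color per class index, and the overlay alpha-blends that color map with the input image. This is a sketch, not the node's actual implementation: the standard Cityscapes color palette, the 19-class ordering, and the 0.5 blend weight are all assumptions.

```python
import numpy as np

# ASSUMPTION: standard Cityscapes RGB colors for trainIds 0-18.
# The palette actually used by infer_img_node may differ.
PALETTE = np.array([
    (128, 64, 128), (244, 35, 232), (70, 70, 70), (102, 102, 156),
    (190, 153, 153), (153, 153, 153), (250, 170, 30), (220, 220, 0),
    (107, 142, 35), (152, 251, 152), (70, 130, 180), (220, 20, 60),
    (255, 0, 0), (0, 0, 142), (0, 0, 70), (0, 60, 100),
    (0, 80, 100), (0, 0, 230), (119, 11, 32),
], dtype=np.uint8)


def pseudo_color(labels: np.ndarray) -> np.ndarray:
    """Map an (H, W) uint8 label image to an (H, W, 3) RGB image.

    Labels outside the palette (e.g. 255) are drawn black.
    """
    out = np.zeros(labels.shape + (3,), dtype=np.uint8)
    valid = labels < len(PALETTE)
    out[valid] = PALETTE[labels[valid]]
    return out


def overlay(image: np.ndarray, labels: np.ndarray, alpha: float = 0.5) -> np.ndarray:
    """Alpha-blend the pseudo-color map onto an (H, W, 3) uint8 RGB image of the same size."""
    color = pseudo_color(labels).astype(np.float32)
    blended = (1.0 - alpha) * image.astype(np.float32) + alpha * color
    return np.clip(np.rint(blended), 0, 255).astype(np.uint8)
```

OpenCV reads and writes images in BGR order. Convert input images with `cv2.cvtColor(img, cv2.COLOR_BGR2RGB)` before calling `overlay`, and convert results back with `cv2.COLOR_RGB2BGR` before saving them with `cv2.imwrite`.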

[Figure: example results (original image, segmentation overlay, pseudo-color map)]

Web Visualization of Inference

Terminal 1 — Launch inference with image publishing:

```bash
ros2 launch br_perception infer_img.launch.py \
    config_path:='config/segmentation/unet.yaml' \
    img_path:='./test_unet.jpg' \
    publish_result_img:=true \
    result_img_topic:='result_img' \
    result_topic:='/inference_result'
```

Example output:

```text
[INFO] [launch]: All log files can be found below /home/zq-pi/.ros/log/2025-05-26-10-05-41-203368-spacemit-k1-x-MUSE-Pi-board-7077
[INFO] [launch]: Default logging verbosity is set to INFO
[INFO] [infer_img_node-1]: process started with pid [7084]
[infer_img_node-1] Inference time: 4712.77 ms
[infer_img_node-1] The image inference results are published cyclically
[infer_img_node-1] The image inference results are published cyclically
[infer_img_node-1] The image inference results are published cyclically
```

Terminal 2 — Start WebSocket visualization:

```bash
ros2 launch br_visualization websocket_cpp.launch.py image_topic:='/result_img'
```

Open `http://<IP>:8080` in a browser, where `<IP>` is the board's IP address, to view the segmentation results.

Message Subscription and Monitoring

The inference results are published on the topic set by `result_topic` (here `/inference_result`). To inspect them:

```bash
ros2 topic echo /inference_result
```

Example output:

```text
header:
  stamp:
    sec: 0
    nanosec: 0
  frame_id: ''
height: 480
width: 640
encoding: mono8
is_bigendian: 0
step: 640
data:
- 2
- 2
- 2
- 2
.....
```

The message is a standard sensor_msgs/msg/Image. Its encoding is mono8: a single-channel 8-bit image in which each pixel value is the class index predicted for that pixel.
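A downstream Python node can turn this message into a NumPy label array. The sketch below works directly on the `height`, `width`, `step` and `data` fields, so it also copes with rows padded beyond `width` bytes, and it counts pixels per class with `np.bincount`. The helper names and the default of 19 classes (Cityscapes) are assumptions for illustration.

```python
import numpy as np


def mono8_to_labels(height: int, width: int, step: int, data) -> np.ndarray:
    """Convert the fields of a mono8 sensor_msgs/Image into an (H, W) uint8 array.

    `step` is the length of one row in bytes. For mono8 it normally equals
    `width`; any padding bytes at the end of each row are dropped.
    The returned array is read-only; call .copy() if you need to modify it.
    """
    buf = np.frombuffer(bytes(data), dtype=np.uint8)
    if buf.size < height * step:
        raise ValueError("data is shorter than height * step")
    return buf[: height * step].reshape(height, step)[:, :width]


def class_histogram(labels: np.ndarray, num_classes: int = 19) -> np.ndarray:
    """Count pixels per class index; values >= num_classes (e.g. 255) are ignored."""
    flat = labels.ravel()
    return np.bincount(flat[flat < num_classes], minlength=num_classes)
```

In an `rclpy` subscription callback the call would be `mono8_to_labels(msg.height, msg.width, msg.step, msg.data)`. Alternatively, `cv_bridge` provides `CvBridge().imgmsg_to_cv2(msg, desired_encoding='mono8')`, which returns an equivalent (H, W) array.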

When you are done, press Ctrl+C in Terminal 1 to stop the publishing node started with:

```bash
ros2 launch br_perception infer_img.launch.py \
    config_path:='config/segmentation/unet.yaml' \
    img_path:='./test_unet.jpg' \
    publish_result_img:=true \
    result_img_topic:='result_img' \
    result_topic:='/inference_result'
```

To run inference on a different image, relaunch the command with a new img_path. The web page updates automatically once the new results are published.
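To process several images in turn without the web view, a small script can relaunch `infer_img.launch.py` once per file with `publish_result_img` left at its default (`false`), so that the node exits after each image as in the first example. This is a sketch under assumptions: it assumes `seg_result.jpg` and `seg_pseudo_color.png` are written to the current working directory (as the log messages suggest) and that `ros2` is on `PATH` after sourcing `setup.bash`. `launch_cmd` and `run_batch` are hypothetical helpers.

```python
import shutil
import subprocess
from pathlib import Path

CONFIG = "config/segmentation/unet.yaml"
# ASSUMPTION: the node writes these files to the current working directory.
OUTPUTS = ("seg_result.jpg", "seg_pseudo_color.png")


def launch_cmd(img_path: str, config_path: str = CONFIG) -> list:
    """Build the infer_img.launch.py command for one image (no result publishing)."""
    # No shell is involved, so the launch arguments need no extra quoting.
    return [
        "ros2", "launch", "br_perception", "infer_img.launch.py",
        f"config_path:={config_path}",
        f"img_path:={img_path}",
    ]


def run_batch(image_dir: str, out_dir: str = "results") -> None:
    """Run inference on every .jpg in image_dir, one launch per image."""
    out = Path(out_dir)
    out.mkdir(exist_ok=True)
    for img in sorted(Path(image_dir).glob("*.jpg")):
        subprocess.run(launch_cmd(str(img)), check=True)
        for name in OUTPUTS:
            # Prefix each output with the image name so the next run does not overwrite it.
            shutil.move(name, out / f"{img.stem}_{name}")
```

For example, call `run_batch("images")` from the directory where the node writes its outputs, after sourcing the ROS 2 environment. Keep the input images in their own directory so that earlier result files are not picked up as inputs.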

infer_img.launch.py Parameters

| Parameter | Description | Default |
|---|---|---|
| config_path | Path to the model configuration file used for inference | config/detection/yolov6.yaml |
| img_path | Path to the image file to run inference on | data/detection/test.jpg |
| publish_result_img | Whether to publish the rendered inference result as an image message | false |
| result_img_topic | Topic for the rendered image (used only when publish_result_img is true) | /result_img |
| result_topic | Topic for the inference result message | /inference_result |

Video Stream Inference

Start Camera Device

```bash
ros2 launch br_sensors usb_cam.launch.py video_device:="/dev/video20"
```

Replace /dev/video20 with the device node of your camera.
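The device number depends on the board and on which cameras are attached. One way to see the candidates is to list the `/dev/video*` nodes in numeric order, as in the sketch below. Not every node is a capture device (some boards expose metadata or ISP nodes as well), so you may need to try a few, or use `v4l2-ctl --list-devices` from the `v4l-utils` package. `video_devices` is a hypothetical helper.

```python
import re
from pathlib import Path


def video_devices(dev_dir: str = "/dev") -> list:
    """Return /dev/video* paths sorted by their numeric suffix (video2 before video10)."""
    nodes = []
    for p in Path(dev_dir).glob("video*"):
        m = re.fullmatch(r"video(\d+)", p.name)
        if m:  # skip names that do not end in a plain number
            nodes.append((int(m.group(1)), str(p)))
    return [path for _, path in sorted(nodes)]


if __name__ == "__main__":
    for dev in video_devices():
        print(dev)
```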

Start Video Segmentation

Terminal 1 — Launch video stream segmentation:

```bash
ros2 launch br_perception infer_video.launch.py \
    config_path:='config/segmentation/unet.yaml' \
    sub_image_topic:='/image_raw' \
    publish_result_img:=true \
    result_topic:='/inference_result'
```

Web Visualization

Terminal 2 — Start WebSocket visualization:

```bash
ros2 launch br_visualization websocket_cpp.launch.py image_topic:='/result_img'
```

Open `http://<IP>:8080` in a browser, where `<IP>` is the board's IP address, to view the live segmentation results.

Raw Inference Results (No Visualization)

To publish only the grayscale semantic labels, without rendering an image for web visualization, set publish_result_img to false:

```bash
ros2 launch br_perception infer_video.launch.py \
    config_path:='config/segmentation/unet.yaml' \
    sub_image_topic:='/image_raw' \
    publish_result_img:=false \
    result_topic:='/inference_result'
```

You can view the data using:

```bash
ros2 topic echo /inference_result
```

Example output:

```text
header:
  stamp:
    sec: 0
    nanosec: 0
  frame_id: ''
height: 480
width: 640
encoding: mono8
is_bigendian: 0
step: 640
data:
- 2
- 2
- 2
- 2
.....
```

As with image inference, the message is a standard sensor_msgs/msg/Image with mono8 encoding: a single-channel 8-bit image in which each pixel value is the class index predicted for that pixel.
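As an illustration of downstream use, a control module might check how much of the area just ahead of the camera is labeled as road. The sketch assumes the label array has already been decoded from the message (for a mono8 image with `step == width`, `np.frombuffer(bytes(msg.data), np.uint8).reshape(msg.height, msg.width)`), that road is class 0 under the Cityscapes trainId convention, and it uses a hypothetical bottom-center region of interest and threshold. None of these values come from the original configuration.

```python
import numpy as np

ROAD = 0  # ASSUMPTION: Cityscapes trainId for "road"


def road_fraction(labels: np.ndarray, top: float = 0.6, left: float = 0.3,
                  right: float = 0.7) -> float:
    """Fraction of pixels labeled ROAD inside a region of interest.

    The (hypothetical) ROI spans rows from top * H down to the bottom and
    columns from left * W to right * W, i.e. the area just ahead of a
    forward-facing camera.
    """
    h, w = labels.shape
    roi = labels[int(top * h):, int(left * w):int(right * w)]
    if roi.size == 0:
        return 0.0
    return float(np.count_nonzero(roi == ROAD)) / roi.size


def path_clear(labels: np.ndarray, threshold: float = 0.8) -> bool:
    """Hypothetical rule: treat the path as clear if most of the ROI is road."""
    return road_fraction(labels) >= threshold
```

A fixed image region is the simplest choice for a sketch; a real controller would more likely derive the region from the camera's mounting and calibration.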

infer_video.launch.py Parameters

| Parameter Name | Description | Default Value |
|---|---|---|
| config_path | Path to the model configuration file used for inference | config/detection/yolov6.yaml |
| sub_image_topic | Image topic to subscribe to | /image_raw |
| publish_result_img | Whether to publish the rendered inference image | false |
| result_img_topic | Topic for the rendered image (used only when publish_result_img is true) | /result_img |
| result_topic | Topic for the inference result message | /inference_result |