4.3 C++ Inference Example

Last Version: 11/09/2025

This section demonstrates how to perform model inference in a C++ environment using an object detection task as an example. Object detection identifies both the location and category of objects in an image or video.

We use the YOLOv5 model running on the ONNX Runtime framework with SpacemiT hardware acceleration.

Clone the Code

git clone https://gitee.com/bianbu/spacemit-demo.git ~/spacemit-demo

Build and Run

cd ~/spacemit-demo/examples/CV/yolov5/cpp

mkdir build

cd build

cmake ..

make

./yolov5_demo --model ../../model/yolov5_n.q.onnx --image ../../data/test.jpg

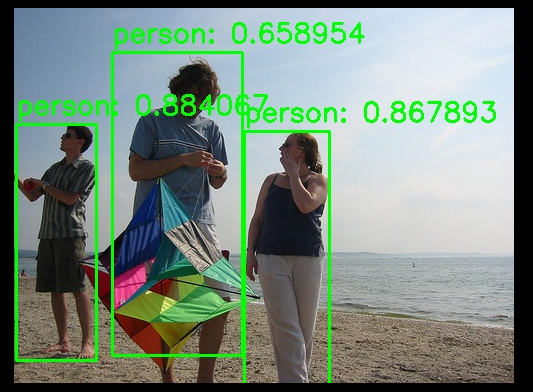

The inference results are saved as result.jpg by default, Example detection result showing bounding boxes:

Inference Process and Code Walkthrough

Header File Imports

#include <iostream>

#include <string>

#include <vector>

#include <opencv2/opencv.hpp>

#include <onnxruntime_cxx_api.h>

#include "spacemit_ort_env.h"

Open Camera

// Open the camera (the device ID defaults to 1)

cv::VideoCapture cap(1);

if (!cap.isOpened()) {

    std::cerr << "Failed to open camera!" << std::endl;

    return -1;

}

Preprocess the Input Image

// Image preprocessing: resize, BGR -> RGB, normalize, then HWC -> CHW

std::vector<float> preprocess(const cv::Mat& image, int inputWidth = 320, int inputHeight = 320) {

    cv::Mat resizedImage;

    // Resize the image to the fixed model input size

    cv::resize(image, resizedImage, cv::Size(inputWidth, inputHeight));

    // Convert the channel order from BGR to RGB

    cv::cvtColor(resizedImage, resizedImage, cv::COLOR_BGR2RGB);

    // Normalize pixel values to the range [0, 1]

    resizedImage.convertTo(resizedImage, CV_32F, 1.0 / 255.0);

    std::vector<float> inputTensor;

    // Reserve the full size up front to avoid repeated reallocation

    inputTensor.reserve(static_cast<size_t>(3) * inputHeight * inputWidth);

    // Rearrange the data from [H, W, C] to [C, H, W]

    for (int c = 0; c < 3; ++c) {

        for (int h = 0; h < inputHeight; ++h) {

            for (int w = 0; w < inputWidth; ++w) {

                inputTensor.push_back(resizedImage.at<cv::Vec3f>(h, w)[c]);

            }

        }

    }

    return inputTensor;

}
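The channel reordering at the end of preprocess can also be sketched without OpenCV, on a plain interleaved buffer, which makes the index arithmetic easier to see. The helper name hwcToChw and its parameters are hypothetical and are not part of the demo code.

```cpp
#include <cstddef>
#include <vector>

// Hypothetical helper (not part of the demo): converts an interleaved
// [H, W, C] buffer into planar [C, H, W] order.
std::vector<float> hwcToChw(const std::vector<float>& hwc,
                            std::size_t height, std::size_t width,
                            std::size_t channels) {
    std::vector<float> chw(hwc.size());
    for (std::size_t c = 0; c < channels; ++c) {
        for (std::size_t h = 0; h < height; ++h) {
            for (std::size_t w = 0; w < width; ++w) {
                // Source: pixel (h, w), channel c in the interleaved layout.
                const std::size_t src = (h * width + w) * channels + c;
                // Destination: plane c, row h, column w.
                const std::size_t dst = (c * height + h) * width + w;
                chw[dst] = hwc[src];
            }
        }
    }
    return chw;
}
```

For a 1 x 2 image with three channels, the interleaved values {1, 2, 3, 4, 5, 6} become {1, 4, 2, 5, 3, 6}: all values of the first channel, then the second, then the third.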

Session Initialization and Pre-configuration

// Initialize ONNX Runtime Environment

Ort::Env env(ORT_LOGGING_LEVEL_WARNING, "YOLOv5Inference");

Ort::SessionOptions session_options;

// Set the number of intra-op threads (maximum 4)

session_options.SetIntraOpNumThreads(4);

session_options.SetGraphOptimizationLevel(GraphOptimizationLevel::ORT_ENABLE_ALL);

// Load the SpacemiT execution provider (EP)

SessionOptionsSpaceMITEnvInit(session_options);

// Load ONNX Model

Ort::Session session_(env, modelPath.c_str(), session_options);

// Get Input Node Names

Ort::AllocatorWithDefaultOptions allocator;

std::vector<const char*> input_node_names_;

std::vector<std::string> input_names_;

size_t num_inputs_;

num_inputs_ = session_.GetInputCount();

input_node_names_.resize(num_inputs_);

input_names_.resize(num_inputs_, "");

for (size_t i = 0; i < num_inputs_; ++i) {

    auto input_name = session_.GetInputNameAllocated(i, allocator);

    input_names_[i].append(input_name.get());

    input_node_names_[i] = input_names_[i].c_str();

}

// Get Input Width and Height (the input shape is in NCHW order)

Ort::TypeInfo input_type_info = session_.GetInputTypeInfo(0);

auto input_tensor_info = input_type_info.GetTensorTypeAndShapeInfo();

std::vector<int64_t> input_dims = input_tensor_info.GetShape();

int inputWidth = static_cast<int>(input_dims[3]);

int inputHeight = static_cast<int>(input_dims[2]);

// Get Output Node Names

std::vector<const char*> output_node_names_;

std::vector<std::string> output_names_;

size_t num_outputs_;

num_outputs_ = session_.GetOutputCount();

output_node_names_.resize(num_outputs_);

output_names_.resize(num_outputs_, "");

for (size_t i = 0; i < num_outputs_; ++i) {

    auto output_name = session_.GetOutputNameAllocated(i, allocator);

    output_names_[i].append(output_name.get());

    output_node_names_[i] = output_names_[i].c_str();

}

// Run image preprocessing

std::vector<float> inputTensor = preprocess(frame, inputWidth, inputHeight);

// Input shape in NCHW order (assumes a batch size of 1 and 3 channels, matching preprocess())

std::vector<int64_t> input_shape = {1, 3, inputHeight, inputWidth};

// Create Input Tensor

auto memory_info = Ort::MemoryInfo::CreateCpu(OrtArenaAllocator, OrtMemTypeDefault);

Ort::Value input_tensor = Ort::Value::CreateTensor<float>(memory_info, inputTensor.data(), inputTensor.size(), input_shape.data(), input_shape.size());
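CreateTensor receives both the length of the data buffer and the tensor shape, so the two have to agree. A minimal sketch of that check in plain C++ follows. The helper name elementCount is hypothetical and is not part of the demo or of ONNX Runtime. The sketch also assumes that a dynamic dimension shows up as a non-positive value (ONNX Runtime typically reports -1) and rejects such shapes.

```cpp
#include <cstdint>
#include <stdexcept>
#include <vector>

// Hypothetical helper: returns the number of elements described by a fully
// specified tensor shape. Throws if any dimension is zero or negative, which
// is how a dynamic (unknown) dimension is assumed to be reported.
std::int64_t elementCount(const std::vector<std::int64_t>& shape) {
    std::int64_t count = 1;
    for (std::int64_t dim : shape) {
        if (dim <= 0) {
            throw std::invalid_argument("shape has a dynamic or empty dimension");
        }
        count *= dim;
    }
    return count;
}
```

For a 320 x 320 model, elementCount({1, 3, 320, 320}) is 307200, which should equal inputTensor.size() before the tensor is created.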

Run Inference

std::vector<Ort::Value> outputs = session_.Run(Ort::RunOptions{nullptr}, input_node_names_.data(), &input_tensor, 1, output_node_names_.data(), output_node_names_.size());
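Run takes the input and output names as arrays of C strings, and those pointers must stay valid for the duration of the call. The earlier loops copy each name into a std::string because the value returned by GetInputNameAllocated owns its buffer and releases it when it goes out of scope. The pattern can be sketched on its own as below; toCStrArray is a hypothetical helper name, not an ONNX Runtime function.

```cpp
#include <string>
#include <vector>

// Hypothetical helper: builds the const char* array that a C-style API
// expects from a vector of owned strings. The returned pointers are valid
// only while `names` is neither modified nor destroyed.
std::vector<const char*> toCStrArray(const std::vector<std::string>& names) {
    std::vector<const char*> ptrs;
    ptrs.reserve(names.size());
    for (const auto& name : names) {
        ptrs.push_back(name.c_str());
    }
    return ptrs;
}
```

The strings own the memory and the pointer array only borrows it, so the pointer array should be built after the string vector has reached its final size.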

Get Output and Postprocess

// Get Output Data

float* dets_data = outputs[0].GetTensorMutableData<float>();

float* labels_pred_data = outputs[1].GetTensorMutableData<float>();

// Get Number of Detected Objects

auto dets_tensor_info = outputs[0].GetTensorTypeAndShapeInfo();

std::vector<int64_t> dets_dims = dets_tensor_info.GetShape();

size_t num_detections = dets_dims[1];

// Get Coordinates, Scores, and Labels Separately

std::vector<std::vector<float>> dets(num_detections, std::vector<float>(4));

std::vector<float> scores(num_detections);

std::vector<int> labels_pred(num_detections);

// Each row of dets_data holds five values: x1, y1, x2, y2, score

for (size_t i = 0; i < num_detections; ++i) {

    for (int j = 0; j < 4; ++j) {

        dets[i][j] = dets_data[i * 5 + j];

    }

    scores[i] = dets_data[i * 5 + 4];

    labels_pred[i] = static_cast<int>(labels_pred_data[i]);

}

// Adjust Bounding Boxes to Original Image Dimensions

// image_shape is the size of the original frame (for example, frame.size())

float scale_x = static_cast<float>(image_shape.width) / inputWidth;

float scale_y = static_cast<float>(image_shape.height) / inputHeight;

for (auto& det : dets) {

    det[0] *= scale_x;

    det[1] *= scale_y;

    det[2] *= scale_x;

    det[3] *= scale_y;

}
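The unpacking and rescaling steps above can be combined into one function that works on the raw output buffers. The sketch below assumes the same layout as the walkthrough (five floats per detection: x1, y1, x2, y2, score, plus a parallel label buffer). The Detection struct and the unpackDetections name are hypothetical, and clamping the boxes to the image bounds is an addition that the original code does not perform.

```cpp
#include <algorithm>
#include <cstddef>
#include <vector>

// Hypothetical container for one detection in original-image coordinates.
struct Detection {
    float x1, y1, x2, y2;
    float score;
    int label;
};

// Clamps a coordinate to the range [0, maxValue].
static float clampCoord(float value, float maxValue) {
    return std::max(0.0f, std::min(value, maxValue));
}

// Sketch: unpack a flat [N, 5] buffer and a parallel label buffer, rescale
// the boxes from model-input size to image size, and clamp them to the image.
std::vector<Detection> unpackDetections(const float* dets, const float* labels,
                                        std::size_t count,
                                        int inputWidth, int inputHeight,
                                        int imageWidth, int imageHeight) {
    const float scaleX = static_cast<float>(imageWidth) / inputWidth;
    const float scaleY = static_cast<float>(imageHeight) / inputHeight;
    const float maxX = static_cast<float>(imageWidth - 1);
    const float maxY = static_cast<float>(imageHeight - 1);

    std::vector<Detection> result;
    result.reserve(count);
    for (std::size_t i = 0; i < count; ++i) {
        const float* row = dets + i * 5;  // x1, y1, x2, y2, score
        Detection d;
        d.x1 = clampCoord(row[0] * scaleX, maxX);
        d.y1 = clampCoord(row[1] * scaleY, maxY);
        d.x2 = clampCoord(row[2] * scaleX, maxX);
        d.y2 = clampCoord(row[3] * scaleY, maxY);
        d.score = row[4];
        d.label = static_cast<int>(labels[i]);
        result.push_back(d);
    }
    return result;
}
```

Keeping each detection in one struct avoids having to keep three parallel vectors in sync, at the cost of a different signature for the visualization step.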

Visualize Results

void visualizeResults(cv::Mat& image, const std::vector<std::vector<float>>& dets, const std::vector<float>& scores, const std::vector<int>& labels_pred, const std::vector<std::string>& labels, float conf_threshold = 0.4f) {

    for (size_t i = 0; i < dets.size(); ++i) {

        const auto& det = dets[i];

        float score = scores[i];

        int class_id = labels_pred[i];

        // Skip low-confidence detections and class IDs that have no entry in labels

        if (score <= conf_threshold || class_id < 0 || class_id >= static_cast<int>(labels.size())) {

            continue;

        }

        int x1 = static_cast<int>(det[0]);

        int y1 = static_cast<int>(det[1]);

        int x2 = static_cast<int>(det[2]);

        int y2 = static_cast<int>(det[3]);

        const std::string& label = labels[class_id];

        // Draw the bounding box and the "label: score" caption above it

        cv::rectangle(image, cv::Point(x1, y1), cv::Point(x2, y2), cv::Scalar(0, 255, 0), 2);

        cv::putText(image, label + ": " + std::to_string(score), cv::Point(x1, y1 - 10), cv::FONT_HERSHEY_SIMPLEX, 0.9, cv::Scalar(0, 255, 0), 2);

    }

}
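The caption passed to cv::putText is built with std::to_string, which prints a float with six decimal places (for example 0.500000). A shorter caption can be produced with a small formatting helper, sketched below. The names formatLabel and classNameOr are hypothetical and are not part of the demo.

```cpp
#include <cstdio>
#include <string>
#include <vector>

// Hypothetical helper: returns the class name for classId, or `fallback`
// when the ID has no entry in the label list.
std::string classNameOr(const std::vector<std::string>& labels, int classId,
                        const std::string& fallback) {
    if (classId < 0 || classId >= static_cast<int>(labels.size())) {
        return fallback;
    }
    return labels[classId];
}

// Hypothetical helper: builds a caption of the form "name: score" with the
// score rounded to two decimal places.
std::string formatLabel(const std::string& name, float score) {
    char buffer[32];
    std::snprintf(buffer, sizeof(buffer), "%.2f", score);
    return name + ": " + buffer;
}
```

With these helpers the caption argument could be written as formatLabel(classNameOr(labels, class_id, "unknown"), score), where the fallback text "unknown" is also just an illustrative choice.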